Introduction

This page reports benchmarks that are available online. If you are aware of some missing benchmark, please report this to us at pnweb@petrinet.net.

Below are the public benchmarks brought to our knowledge.

The Model Checking Contest Benchmark Suite

This benchmark suite is provided by the People involved in the organisation of the Model Checking Contest since 2011. These models have a large variety of authors who are referenced.

Models in the Model Checking Contest Benchmark Suite

The benchmark proposed for the Model Checking Contest (MCC) consists of P/T nets (1-bounded, k-bounded, unbounded), and coloured Nets (1 and k-bounded per colour, with and without non-equal guards on transitions, with and without cartesian product on colours, with and without successor/predecessor functions).

For a number of P/T nets, additional information about the concurrent structure of these nets is provided under the form of a NUPN (Nested-Unit Petri Net) "tool specific" element in the PNML file. This information can be exploited by tools at will. For coloured Petri Nets, equivalent P/T nets are provided in mostcases.

Two classes of models are provided:

- Academic Models: These models were designed in Universities by researchers, in order to benchmark some tools, to illustrate a typical situation, or within the context of academic projects and collaborations.

- Industrial Models: These models were designed within the context of industrial projects.

In 2025, 142 models are available, that makes 1855 instances by expanding their parameters.

Formulas in the Model Checking Contest Benchmark Suite

The models also come with formulas used for some of the MCC competitions. In particular, there are:

- reachability formulas

- CTL formulas

- LTL formulas

Formulas are generated randomly and consolidated to be as complex as possible. Since every year brings new models, and considering that formulas are regenerated every year, some models can be associated with a large number of formulas.

Access to formulas is provided here:

- all formulas for the MCC'2025

- all formulas for the MCC'2024

- all formulas for the MCC'2023

- all formulas for the MCC'2022

- all formulas for the MCC'2021

- all formulas for the MCC'2020

- all formulas for the MCC'2019

- for earlier sets of formulas, please have a look at the result web page of the current year

Formulas are expressed in XML. Some documentation about the XML representation is available in the submission kit. Many tools participating to the Model Checking Contest have shared libraries to parse this format. You may avoid writing a new parser by contacting the developers.

Please note that for each year, a comprehensive report of results is computed in the form of a CSV file : raw-result-analysis.csv. The "majority result" is also reported ; it is almost in every case the correct one (only a few counter examples among hundreds of thousand formulas). Each estimated result is associated with a rating. Here is a direct access to this CSV file for the most recent years:

- the raw-result-analysis.csv in MCC'2025

- the raw-result-analysis.csv in MCC'2024

- the raw-result-analysis.csv in MCC'2023

- the raw-result-analysis.csv in MCC'2022

- the raw-result-analysis.csv in MCC'2021

- the raw-result-analysis.csv in MCC'2020

- the raw-result-analysis.csv in MCC'2019

- the raw-result-analysis.csv in MCC'2018

- the raw-result-analysis.csv in MCC'2017

- the raw-result-analysis.csv in MCC'2016

- this file was not computed in earlier versions of the Model Checking Contest

The VLPN Benchmark Suite

This is a collection of 350 Petri nets (called models) to be used as benchmarks in scientific experiments. These models have the following characteristics:

- they are realistic in the sense they have not been produced randomly, but generated from high-level specifications of meaningful systems

- they are complex as they have been carefully selected in a collection of 10,000 Petri nets by retaining only the largest ones

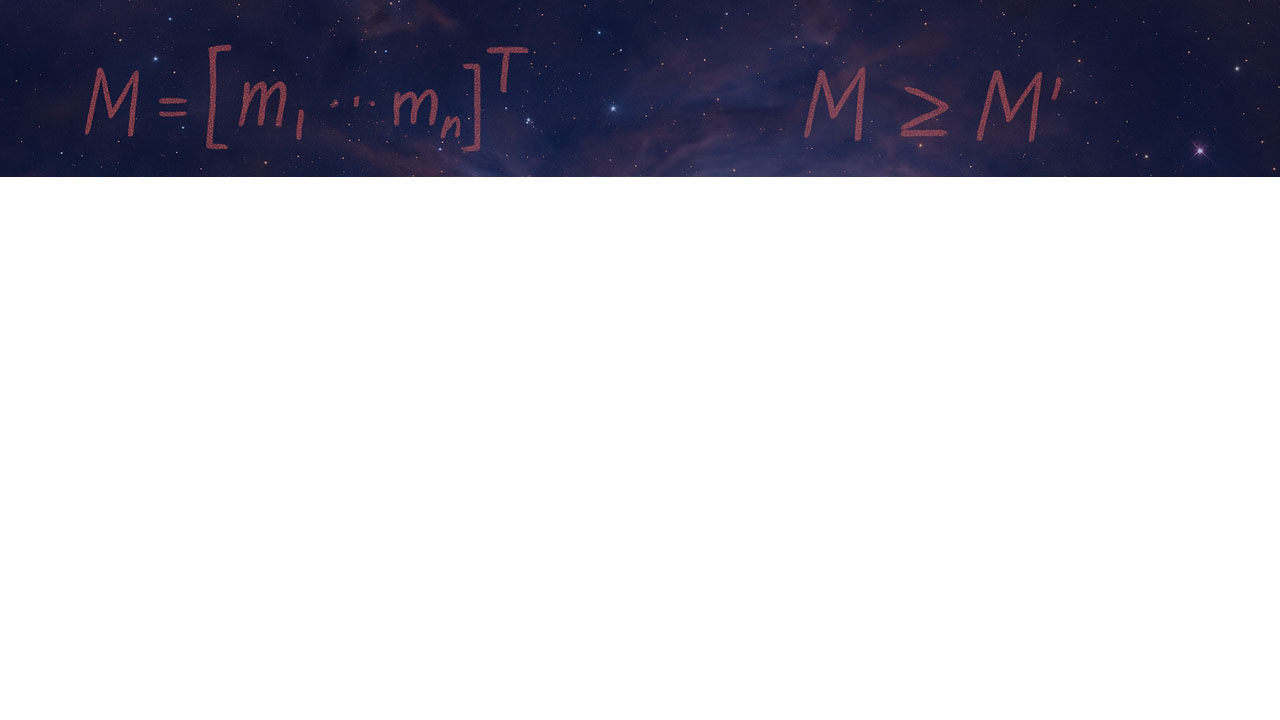

- they are structured as they are given in the form of Nested-Unit Petri nets (NUPNs) [Gar15] , which bring hierarchical structure to Petri nets

Note that some of the models in the VLPN benchmark suite are also located in the Model Checking Contest Benchmark Suite.

This benchmark suite is provided by the CONVECS team (inria), and more precisely, the developers of the CADP tool.